4.3.3 Configure inventory files

Configure the Ansible inventory file for each of your environments.

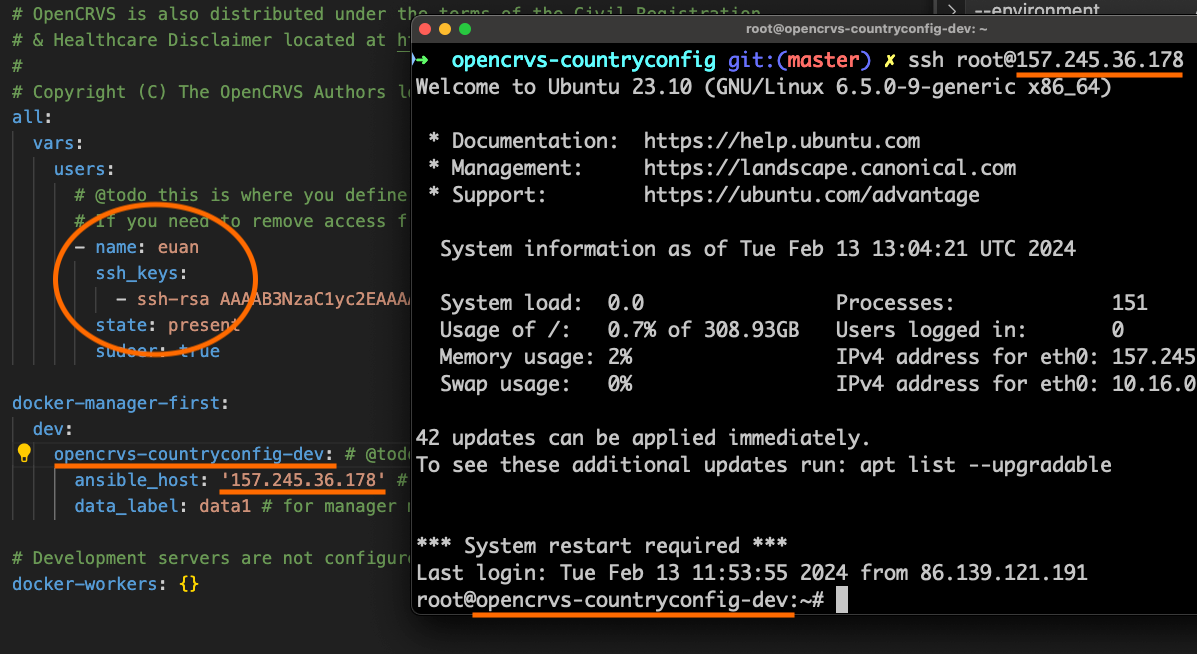

Start with the `development.yml` file:

```yaml
all:
  vars:
    users:
      # @todo this is where you define which development team members have access to the server.
      # If you need to remove access from someone, do not remove them from this list, but instead set their state: absent
      - name: <REPLACE WITH A USERNAME FOR YOUR SSH USER>
        ssh_keys:
          - <REPLACE WITH THE USER'S PUBLIC SSH KEY>
        state: present
        sudoer: true
    enable_backups: false

docker-manager-first:
  hosts:
    <REPLACE WITH THE SERVER HOSTNAME>: # @todo set this to be the hostname of your target server
      ansible_host: '<REPLACE WITH THE IP ADDRESS>' # @todo set this to be the IP address of your server
      data_label: data1 # for manager machines, this should always be "data1"

# Development servers are not configured to use workers.
docker-workers: {}
```
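The `users` list is a YAML sequence, so each team member who needs SSH access gets their own entry. To revoke access, the comment in the file says to keep the entry and change its `state` rather than delete it. The fragment below is a minimal sketch of a `users` list with one active member and one former member whose access has been revoked. The second entry's placeholders (`<USERNAME OF THE PERSON LEAVING>`, `<THAT USER'S PUBLIC SSH KEY>`) are hypothetical and only illustrate the shape of the entry; every other key comes from the inventory in this section.

```yaml
all:
  vars:
    users:
      # Active team member: keeps SSH (and sudo) access to the server.
      - name: <REPLACE WITH A USERNAME FOR YOUR SSH USER>
        ssh_keys:
          - <REPLACE WITH THE USER'S PUBLIC SSH KEY>
        state: present
        sudoer: true
      # Hypothetical former team member: the entry stays in the list,
      # only `state` changes from present to absent to revoke access.
      - name: <USERNAME OF THE PERSON LEAVING>
        ssh_keys:
          - <THAT USER'S PUBLIC SSH KEY>
        state: absent
        sudoer: true
    enable_backups: false
```

Keeping the revoked entry in the list, instead of deleting it, gives the playbook an explicit record of the account to remove on the next run.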

Next, review the `qa.yml` file ...

Next, review the `backup.yml` file ...

Next, review the `staging.yml` file ...

Finally, review the `production.yml` file ...
